In this article, I’ll explain how to use the Semantic Kernel and the OpenAI Vision API to perform two tasks:

- Explain why a meme is funny

- Identify an animal in an image and pass the results to another function that generates three interesting facts about the animal.

The examples in this article are intentionally simple. The goal is to give you a good idea of the capabilities of the Semantic Kernel and the OpenAI Vision API. The plugin can be used for more complex tasks, such as figuring out whether a street intersection is accessible to wheelchairs, checking whether a person is wearing a mask, or automatically generating accessible image descriptions.

The code for all examples can be found in the demo.py source file in the GitHub repository.

Explaining why memes are funny

In this first example, I use the plugin to explain why a meme is funny. My code instantiates and calls the Vision plugin. The plugin has a single function, ApplyPromptToImage, which takes a prompt and an image URL as input and returns the generated text. In my example, the prompt is simply “Why is this meme funny?”, and I pass the URL of a meme image.

    import semantic_kernel as sk

    # Vision is the native plugin class defined later in this article.
    # The top-level await assumes a notebook or an enclosing async function.
    kernel = sk.Kernel()
    vision = kernel.import_skill(Vision())

    meme_base_url = "https://raw.githubusercontent.com/lucas-a-meyer/lucas-a-meyer.github.io/main/images/"
    meme_url_list = ["meme1.jpg", "meme2.png", "meme3.jpg"]

    for url in meme_url_list:
        # Each call gets its own context holding the prompt and the image URL
        variables = sk.ContextVariables()
        variables['prompt'] = "Why is this meme funny?"
        variables['url'] = meme_base_url + url
        meme = await kernel.run_async(vision['ApplyPromptToImage'], input_vars=variables)
        print(f"{meme}\n\n")

Here are the results. The Vision API understands each image well and produces a plausible explanation of why the meme is funny.

| Meme | Explanation |

|---|---|

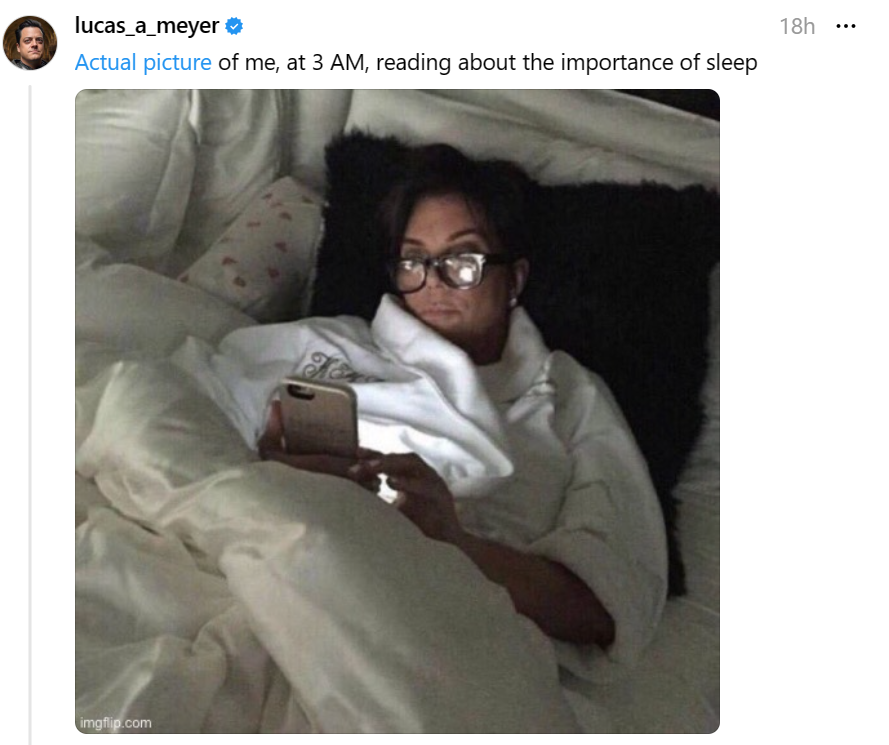

|

The humor in this meme comes from the irony and self-awareness of the situation. The person in the image is depicted as being awake at 3 AM, a time typically associated with sleep, while reading about the importance of sleep. The implication is that while they understand the value of sleep for health and well-being, they are not following this advice themselves. The relatable contradiction between what they’re doing (staying up late) and what they’re reading about (the importance of sleep) creates a humorous juxtaposition that many people can identify with, as it’s common for individuals to do things that go against their own better judgment or knowledge. |

|

This meme is humorous because it presents a situation where someone has comically circumvented a rule through a technicality. The text explains that while there’s a rule against having pumpkins in their dormitory, there’s no mention of pineapples. The images show a pineapple carved in a manner similar to a jack-o’-lantern, traditionally made from a pumpkin, often for Halloween. The humor arises from the person’s literal interpretation of the rules, exploiting the lack of specificity to create a loophole and do something in the spirit of what’s prohibited yet not technically against the rules. This kind of wit and playful rebellion against overly specific or seemingly arbitrary rules often resonates with people who enjoy clever ways to bend or skirt the rules without breaking them. |

|

The humor in this meme arises from the juxtaposition of an inspirational saying typically found in areas of the home where food is prepared or consumed—like the kitchen—and placing it in a completely inappropriate context—the bathroom. Specifically, the framed quote says, “LIFE IS SHORT LICK THE BOWL,” which in a culinary context encourages people to enjoy life to the fullest, perhaps by savoring every last bit of their food. However, when placed in the bathroom, right next to a toilet bowl, the phrase takes on a literal and unpleasantly comical meaning. The idea of licking a toilet bowl is both absurd and humorous, evoking a reaction due to the drastic shift in the context of the phrase. |

In the next section, I’ll explain how to use the plugin to identify an animal in an image and pass the results to another function.

Chaining the plugin answer to another function

In the example below, I first use the Vision plugin to identify an animal, and then use the result to ask for three interesting facts about that animal. I have created a semantic function with the prompt “Provide three interesting and unusual facts about the animal {{$input}}” and called it AnimalFacts.
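For reference, a semantic function in this generation of the Semantic Kernel Python SDK is usually a folder that holds a prompt file and a small configuration file. The sketch below writes such a folder to a temporary directory. The layout (plugins/AnimalFacts with skprompt.txt and config.json) is my understanding of what import_semantic_skill_from_directory(".", "plugins") looks for; the description and completion settings in config.json are assumptions for illustration, not the values from the repository.

```python
import json
import tempfile
from pathlib import Path

# The prompt quoted in the article; {{$input}} is filled in by the kernel.
PROMPT = "Provide three interesting and unusual facts about the animal {{$input}}"

# Assumed settings: the article does not show the real config.json.
CONFIG = {
    "schema": 1,
    "type": "completion",
    "description": "Generates three facts about an animal",
    "completion": {"max_tokens": 300, "temperature": 0.7},
}


def write_animal_facts(parent_dir: Path) -> Path:
    """Create <parent_dir>/plugins/AnimalFacts with its two files."""
    function_dir = parent_dir / "plugins" / "AnimalFacts"
    function_dir.mkdir(parents=True, exist_ok=True)
    (function_dir / "skprompt.txt").write_text(PROMPT, encoding="utf-8")
    (function_dir / "config.json").write_text(
        json.dumps(CONFIG, indent=2), encoding="utf-8"
    )
    return function_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        created = write_animal_facts(Path(tmp))
        print(sorted(p.name for p in created.iterdir()))
```

The folder name becomes the function name, which is why the later code can look it up as plugins['AnimalFacts'].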

The URL I’m passing in the code below points to a picture of an owl. The Vision API correctly identifies the animal as an owl, and the AnimalFacts function generates three interesting facts about owls.

My example takes two steps. First, the GPT-4 Vision API identifies the animal in the image; then GPT-3.5 generates the facts.

It would be possible to solve the problem with a single call to the Vision API, but that API can be expensive, so I take its result and pass it to a cheaper model for the text generation step.
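The two-step pattern can be sketched without any API calls. In the sketch below, identify_animal and animal_facts are hypothetical stand-ins for the Vision call and the AnimalFacts function, and clean_one_word is a helper I am adding on the assumption that a model may return extra whitespace or punctuation around its one-word answer. Only the chaining logic is the point here.

```python
import asyncio
import string


async def identify_animal(url: str) -> str:
    """Hypothetical stand-in for the expensive vision call."""
    return " Owl.\n"  # simulated answer with stray whitespace and punctuation


async def animal_facts(animal: str) -> str:
    """Hypothetical stand-in for the cheaper text-only call."""
    return f"Three facts about the {animal} would go here."


def clean_one_word(answer: str) -> str:
    """Strip whitespace and surrounding punctuation, then lowercase."""
    return answer.strip().strip(string.punctuation).lower()


async def describe(url: str) -> tuple[str, str]:
    # Step 1: the vision model only has to name the animal.
    animal = clean_one_word(await identify_animal(url))
    # Step 2: the short text result feeds the cheaper model.
    facts = await animal_facts(animal)
    return animal, facts


if __name__ == "__main__":
    print(asyncio.run(describe("https://l.meyerperin.com/skv_owl")))
```

Keeping the vision prompt to a one-word answer keeps the expensive call short, and the cleanup step protects the second prompt from inputs such as "Owl." with a trailing period.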

    from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion

    url = "https://l.meyerperin.com/skv_owl"

    # api_key and org_id are assumed to be loaded earlier, for example from a .env file
    gpt35 = OpenAIChatCompletion("gpt-3.5-turbo", api_key, org_id)
    kernel.add_chat_service("gpt35", gpt35)

    vision = kernel.import_skill(Vision())
    plugins = kernel.import_semantic_skill_from_directory(".", "plugins")

    # Step 1: ask the Vision plugin to name the animal
    variables = sk.ContextVariables()
    variables['prompt'] = "What animal is this? Please respond in one word."
    variables['url'] = url
    animal = await kernel.run_async(vision['ApplyPromptToImage'], input_vars=variables)

    # Step 2: pass the one-word answer to the AnimalFacts semantic function
    facts = await kernel.run_async(plugins['AnimalFacts'], input_str=str(animal))

    print(f"The animal from the picture is a {animal}")
    print(f"{facts}\n\n")

From the call above, I got the following result about owls:

1. Owls have specialized feathers with fringes of varying softness that help muffle sound when they fly. Their broad wings and light bodies also make them practically silent fliers, which helps them stalk prey more easily.

2. Unlike most birds, owls have both eyes facing forward which gives them better depth perception for hunting.

3. Some species of owls, such as the Great Gray Owl, can hear a mouse moving underneath a foot of snow from up to 60 feet away. Their ears are asymmetrical, with one ear being higher than the other, which helps them locate sounds in multiple dimensions.

How to write a native plugin to wrap the OpenAI API

In this section, I’ll explain all the steps involved in writing a Semantic Kernel native Python plugin to wrap the OpenAI API. I’ll use the Vision API as an example. The plugin code is in the GitHub repository.

    from dotenv import load_dotenv
    from openai import OpenAI
    from semantic_kernel.skill_definition import sk_function, sk_function_context_parameter
    from semantic_kernel.orchestration.sk_context import SKContext
    import os

We need to import the OpenAI library; the SKContext class, because our function takes multiple parameters (the prompt and the image URL); and the decorators in skill_definition that make the Python function visible to the Semantic Kernel.

    class Vision:
        @sk_function(
            description="""Asks the GPT-4 Vision API to perform an operation described by the prompt
            on an image given its url""",
            name="ApplyPromptToImage"
        )
        @sk_function_context_parameter(
            name="prompt",
            description="The prompt you want to send to the Vision API",
        )
        @sk_function_context_parameter(
            name="url",
            description="",
        )

The main code of the function consists of assembling a message that conforms to the Vision API specification. The message is then sent to the API, and the result is parsed and returned to the caller.

        def ApplyPromptToImage(self, context: SKContext) -> str:
            # Read OPENAI_API_KEY from the .env file or the environment
            load_dotenv()
            client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

            # One user message with two parts: the text prompt and the image URL
            response = client.chat.completions.create(
                model="gpt-4-vision-preview",
                messages=[
                    {
                        "role": "user",
                        "content": [
                            {"type": "text", "text": f"{context['prompt']}"},
                            {"type": "image_url", "image_url": {"url": f"{context['url']}"}},
                        ],
                    }
                ],
                max_tokens=300,
            )
            return response.choices[0].message.content

The full code for the plugin and associated demo can be found in the GitHub repository.
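If you want to check the message format without spending API calls, the message-assembly step can be pulled out into a pure function. This is a minimal sketch, not the code from the repository; build_vision_messages is a hypothetical helper name, and it produces the same structure that ApplyPromptToImage passes as messages.

```python
def build_vision_messages(prompt: str, image_url: str) -> list[dict]:
    """Return a single user message holding a text part and an image part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


if __name__ == "__main__":
    messages = build_vision_messages(
        "What animal is this? Please respond in one word.",
        "https://l.meyerperin.com/skv_owl",
    )
    # Print the image URL nested inside the second content part.
    print(messages[0]["content"][1]["image_url"]["url"])
```

Because the function has no side effects, it can be unit tested on its own, leaving only the network call to be exercised against the real API.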

Have I ever thought about creating an app that does this as a service?

Yes, and with the blog post above, you can easily create one yourself. It will probably take you less than an hour to get it working. The main challenge is billing for it, because OpenAI Vision calls can get expensive very quickly.
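As a starting point for billing, you can estimate the cost of each call from the token counts that a chat completion reports in its usage field (prompt_tokens and completion_tokens). The sketch below is an illustration only: the Usage class is a local stand-in for that field, and both prices are placeholders I made up, so replace them with the current rates for the model you use.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """Local stand-in for the token counts in a chat completion's usage field."""
    prompt_tokens: int
    completion_tokens: int


# Placeholder prices in USD per 1,000 tokens; NOT real rates.
PROMPT_PRICE_PER_1K = 0.01
COMPLETION_PRICE_PER_1K = 0.03


def estimate_cost(usage: Usage) -> float:
    """Estimate the cost of one call from its token counts."""
    prompt_cost = usage.prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
    completion_cost = usage.completion_tokens / 1000 * COMPLETION_PRICE_PER_1K
    return prompt_cost + completion_cost


if __name__ == "__main__":
    print(f"${estimate_cost(Usage(prompt_tokens=900, completion_tokens=300)):.4f}")
```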